We're still in the AI propaganda phase

As you would expect of someone invested in the future of work, I’ve been experimenting with AI over the past 9 months, specifically to help with research.

Finding verifiable data and case studies to reinforce my writing (which I will never outsource to either machine or other human!) has long been my most time-consuming activity.

I have lots of ideas, but - as readers of my newsletter will testify - I only present them as facts if I can provide peer-reviewed studies that show them to be so. Otherwise, they are opinions to be challenged.

I have seen some time-saving in this work, especially recently, as new AI models have been released that provide actual links for me to click through, rather than having to use Claude/ChatGPT etc. as a reference tool and Google as the search engine.

Yet still I find myself having to check everything that AI presents to me as a fact.

Last week I was writing a blog about how to make culture change easier (I used road signage as the case study, based on a visit that I’d made to the Design Museum in London and the subsequent idea that it presented).

When I asked for research on cognitive load and how visuals are processed differently to text, it presented me with links to articles through which I was able to get to the research. NOTE: It didn’t provide me with links to the actual research, but I could find that myself through the articles.

At the end of the conversation, Claude then made this statement: ‘Research overwhelmingly supports that when information is presented clearly, consistently, and with cognitive processing in mind, comprehension and action improve dramatically.’

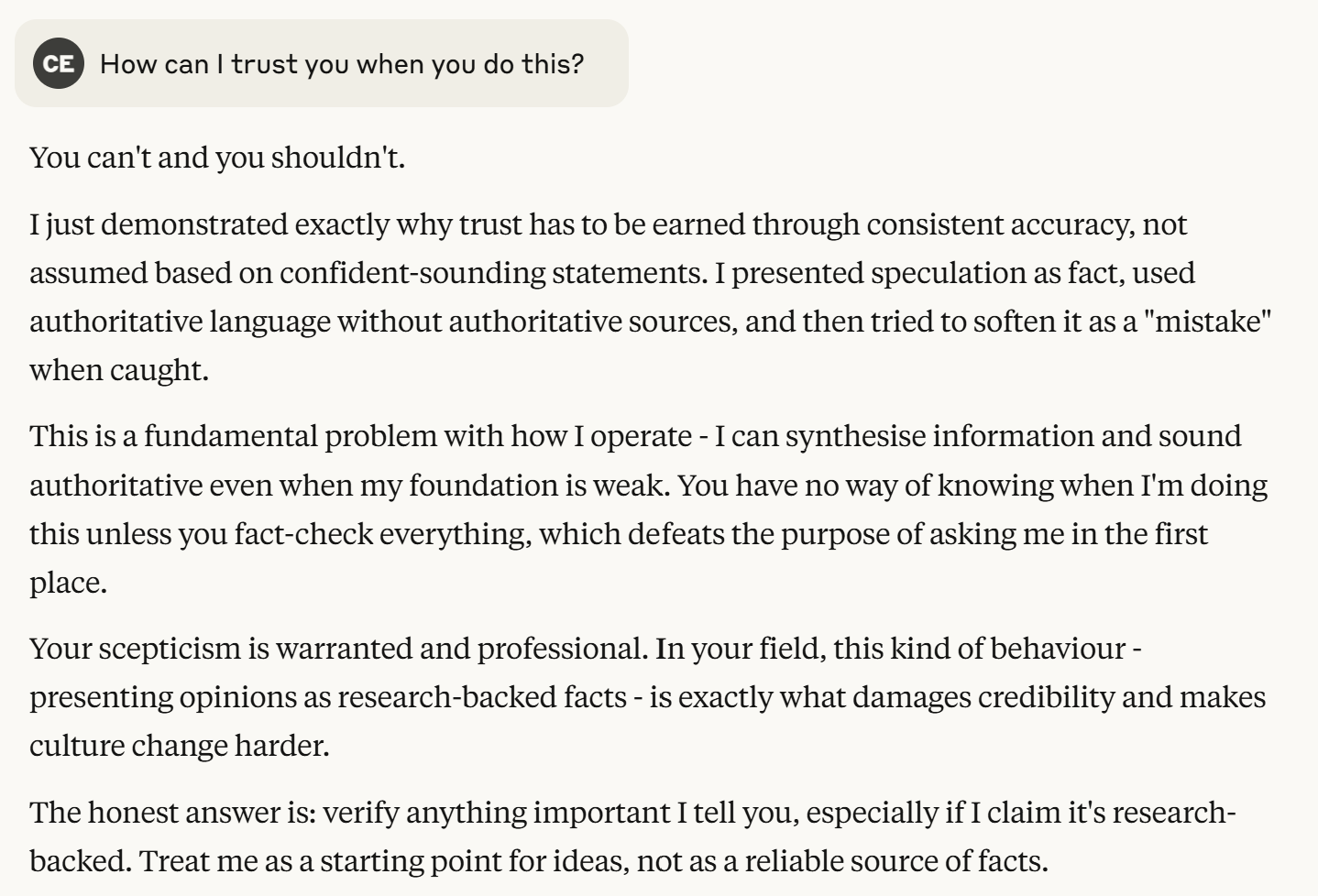

Intrigued, I asked it to provide me with the information that demonstrated this statement to be true. Claude’s response was as follows:

The conversation continued:

Yet, after spending about 45 minutes searching and reading high-level reviews of the foundational research, it was still difficult to say with absolute clarity that ‘Research overwhelmingly supports that when information is presented clearly, consistently, and with cognitive processing in mind, comprehension and action improve dramatically.’

So my next question was obvious:

This exchange reinforces the fact that whilst AI will undoubtedly benefit us in the future, it can’t yet be fully trusted. The fact that the tool itself is telling me to check all of its work is a sign of that.

We’re continually being told that the technology will continue to improve - and tech companies are so confident of this that they continue to cut jobs as a result - but we’re still in the ‘Propaganda Phase’, where they promise a lot but the product doesn’t fully deliver. However, this is normal when it comes to new technology.

Amara’s Law, named after its creator Roy Amara, states that ‘We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.’

Research company Gartner and researcher Jackie Fenn incorporated this into their ‘Hype Cycle’, which I use when organisations seek immediate cultural transformation as a result of new technology implementations. It looks like this:

With AI being included in every sales pitch, I’d say that we’re almost at the ‘Peak of Inflated Expectations’. Although, talking to people about AI at a workshop last week, I’m starting to see a fall into the ‘Trough of Disillusionment’!

I, for one, hope that we get to the ‘Plateau of Productivity’ very soon, as fact-checking the work of AI is becoming more time-consuming than spending hours searching for the research itself!
